Caudal anaesthesia and ilioinguinal block are effective, safe anaesthetic techniques for paediatric inguinal herniotomy. This review article aims to educate medical students about these techniques by examining their safety and efficacy in paediatric surgery, as well as discussing the relevant anatomy and pharmacology. The roles of general anaesthesia in combination with regional anaesthesia, and that of awake regional anaesthesia, are discussed, as is the administration of caudal adjuvants and concomitant intravenous opioid analgesia.

Introduction

Inguinal hernia is a common paediatric condition, occurring in approximately 2% of infant males, with a slightly lower incidence in females, [1] and in as many as 9-11% of premature infants. [2] Inguinal herniotomy, the reparative operation, is most commonly performed under general anaesthesia with regional anaesthesia; however, some experts in caudal anaesthesia perform the procedure with awake regional anaesthesia. Regional anaesthesia can be provided via the epidural (usually caudal) or spinal routes, or by blocking peripheral nerves with local anaesthetic agents. The relevant techniques and anatomy will be discussed, as will side effects and safety considerations, and the pharmacology of the most commonly used local anaesthetics. The role of general anaesthesia, awake regional anaesthesia and the use of adjuvants in regional anaesthesia will be discussed, with particular focus on future developments in these fields.

Anatomy and technique

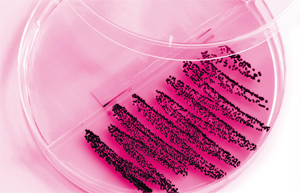

The surgical field for inguinal herniotomy is supplied by the ilioinguinal and iliohypogastric nerves, arising from the first lumbar spinal root, as well as by the lower intercostal nerves, arising from T11 and T12. [3] Caudal anaesthesia is provided by placing local anaesthetic agents into the epidural space, via the caudal route. The local anaesthetic then diffuses across the dura to anaesthetise the ventral rami, which supply sensory (and motor) nerves. Thus, the level of anaesthesia needs to reach the lower thoracic region to be effective.

The caudal block is usually commenced after the induction of general anaesthesia. With the patient lying in the left lateral position, the thumb and middle finger of the anaesthetist’s left hand are placed on the two posterior superior iliac spines; the index finger then palpates the spinous process of the S4 vertebra. [4] Using sterile technique, a needle is inserted through the sacral hiatus to pierce the sacrococcygeal ligament, which is continuous with the ligamentum flavum (Figure 1). Correct placement of the needle can be confirmed by the “feel” of the needle passing through the ligament, the ease of injection and, if used, the ease of passing a catheter through the needle. The absence of spontaneous reflux, or aspiration, of cerebrospinal fluid or blood should be confirmed before drugs are injected into the sacral canal, which is continuous with the lumbar epidural space. [5]

Ilioinguinal block is achieved by using sterile technique to insert a needle inferomedially to the anterior superior iliac spine and injecting local anaesthetic between the external oblique and internal oblique muscles, and between the internal oblique and the transversus abdominis. [6] These injections cover the ilioinguinal, iliohypogastric and lower intercostal nerves, anaesthetising the operating field, including the inguinal sac. [3] Commonly, these nerves are blocked by the surgeon during the operation, when local anaesthetic can be applied directly to the nerves. Ultrasound guidance has enabled more accurate placement of injections, allowing lower doses to be used [7] and improving success rates, [8] leading to something of a resurgence of the technique. [4]

Pharmacological aspects

Considerable discussion has arisen regarding which local anaesthetic agent is the best choice for caudal anaesthesia: bupivacaine or the newer pure left-isomers levobupivacaine and ropivacaine. A review by Casati and Putzu examined evidence regarding the toxicology and potency of these new agents in both animal and human studies. Despite conflicting results in the literature, this review ultimately suggested that there was a very small difference in potency between the agents: bupivacaine is slightly more potent than levobupivacaine, which is slightly more potent than ropivacaine. [9] Breschan et al. suggested that a caudal dose of 1 mL/kg of 0.2% levobupivacaine or ropivacaine produced less post-operative motor blockade than 1 mL/kg of 0.2% bupivacaine. [10] This result could be consistent with a mild underdosing of the former two agents in light of their lesser potency, rather than intrinsic differences in motor effect. Doses for ilioinguinal nerve block are variable, given the blind technique commonly employed and the need to obtain adequate analgesia. Despite this, the maximum recommended single shot dose is the same for all three agents: neonates should not exceed 2 mg/kg, and children should not exceed 2.5 mg/kg. [11] Despite multiple studies showing minimal yet statistically significant differences, all three agents are nonetheless comparably effective local anaesthetic agents. [9]

When examining toxicity of the three agents discussed above, Casati and Putzu reported that the newer agents (ropivacaine and levobupivacaine) were less toxic than bupivacaine, reaching higher plasma concentrations before signs of CNS toxicity occurred, and with less cardiovascular toxicity occurring at levels that induce CNS toxicity. [9] Bozkurt et al. determined that a caudal dose of 0.5 mL/kg of 0.25% (effectively 1.25 mg/kg) bupivacaine or ropivacaine resulted in peak plasma concentrations of 46.8 ± 17.1 ng/mL and 61.2 ± 8.2 ng/mL, respectively. These are well below the levels at which toxic effects appear for bupivacaine and ropivacaine, at 250 ng/mL and 150-600 ng/mL, respectively. [12] The larger doses required for epidural anaesthesia and peripheral nerve blocks carry an increased risk of systemic toxicity, so the lower toxic potential of levobupivacaine and ropivacaine justifies their use over bupivacaine. [9,13] However, partly due to cost, bupivacaine remains in wide use today. [14]

Caudal anaesthesia requires consideration of two aspects of dose: concentration and volume. The volume of the injection controls the level to which anaesthesia occurs, as described by Armitage:

- 0.5 mL/kg will cover sacral dermatomes, suitable for circumcision

- 0.75 mL/kg will cover inguinal dermatomes, suitable for inguinal herniotomy

- 1 mL/kg will cover up to T10, suitable for orchidopexy or umbilical herniotomy

- 1.25 mL/kg will cover up to mid-thoracic dermatomes. [15]

It is important to ensure both an adequate amount of local anaesthetic (mg/kg) and an adequate volume for injection (mL/kg) are used.
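
The interplay between volume and amount can be illustrated with a short arithmetic sketch. It is a teaching aid only, not a dosing tool: the function and dictionary names are hypothetical, the volumes are those attributed to Armitage above, and it assumes that the single shot ceilings quoted earlier (2 mg/kg for neonates, 2.5 mg/kg for children) are the relevant limits for a caudal injection.

```python
# Illustrative arithmetic only, using figures quoted in this review.
# Not clinical guidance; every name below is hypothetical.

# Armitage volumes (mL/kg) for the block height required.
ARMITAGE_VOLUME_ML_PER_KG = {
    "sacral": 0.5,
    "inguinal": 0.75,
    "T10": 1.0,
    "mid-thoracic": 1.25,
}

# Single shot ceilings (mg/kg) quoted in the text for all three agents.
# Assumption: these ceilings apply to the caudal route.
MAX_DOSE_MG_PER_KG = {"neonate": 2.0, "child": 2.5}


def percent_to_mg_per_ml(concentration_percent):
    """Convert % w/v to mg/mL (1% = 1 g per 100 mL = 10 mg/mL)."""
    return concentration_percent * 10.0


def caudal_dose(weight_kg, level, concentration_percent, age_group):
    """Return injected volume, amount of drug and a ceiling check."""
    volume_ml = ARMITAGE_VOLUME_ML_PER_KG[level] * weight_kg
    dose_mg = volume_ml * percent_to_mg_per_ml(concentration_percent)
    dose_mg_per_kg = dose_mg / weight_kg
    return {
        "volume_ml": volume_ml,
        "dose_mg": dose_mg,
        "dose_mg_per_kg": dose_mg_per_kg,
        "within_ceiling": dose_mg_per_kg <= MAX_DOSE_MG_PER_KG[age_group],
    }


# Hypothetical 10 kg child, inguinal level, 0.25% solution.
print(caudal_dose(10, "inguinal", 0.25, "child"))
# Hypothetical 3 kg neonate, T10 level, 0.25% solution.
print(caudal_dose(3, "T10", 0.25, "neonate"))
```

In the first example, 0.75 mL/kg of a 0.25% solution corresponds to 7.5 mL and 1.875 mg/kg, which sits under the quoted ceiling for children. In the second, 1 mL/kg of the same solution corresponds to 2.5 mg/kg, which exceeds the quoted neonatal ceiling; under these assumptions a more dilute solution would be needed to reach the same level.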

Efficacy of caudal and ilioinguinal blocks

Ilioinguinal block and caudal anaesthesia both provide excellent analgesia in the intraoperative and postoperative phases. Some authors suggest that ultrasound guidance in ilioinguinal block can increase accuracy of needle placement, allowing a smaller dose of local anaesthetic. [16] Thong et al. reviewed 82 cases of ilioinguinal block without ultrasonography, and found similar success rates to other regional techniques; [17] however, this was a small study. Markham et al. used cardiovascular response as a surrogate marker for intraoperative pain and found no difference between the two techniques. [18] Other studies have shown that both techniques provide similarly effective analgesic profiles in terms of post-operative pain scores, [19] duration or quality of post-operative analgesia, [20] and post-operative morphine requirements. [21] Caudal anaesthesia has a success rate of up to 96%, [22] albeit with 25% of patients requiring more than one attempt. In contrast, blind ilioinguinal block has a success rate of approximately 72%. [23] Willschke et al. quoted success rates of 70-80%, which improved with ultrasound guidance. [16] In a small study combining the two techniques, Jagannathan et al. explored the role of ultrasound-guided ilioinguinal block after inguinal herniotomy surgery performed under general anaesthetic with caudal block. With groups randomised to receive injections of normal saline, or bupivacaine with adrenaline, they found that the addition of a guided nerve block at the end of the surgery significantly decreased post-operative pain scores for the bupivacaine with adrenaline group. [24] This suggests that the two techniques can be combined for post-operative analgesia. Ilioinguinal block is not suitable as the sole method of anaesthesia, as its success rate is highly variable and the block is not sufficient for surgical anaesthesia, whereas caudal block can be used as an awake regional anaesthetic technique. Both techniques are suitable for analgesia in the paediatric inguinal herniotomy setting.

Complications and side effects

Complications of caudal anaesthesia are rare at 0.7 per 1000 cases. [5] However, some of these complications are serious and potentially fatal:

- accidental dural puncture, leading to high spinal block

- intravascular injection

- infection and epidural abscess formation

- epidural haematoma. [4,13]

A comprehensive review of 2,088 caudal anaesthesia cases identified 101 (4.8%) cases in which either the dura was punctured, significant bleeding occurred, or a blood vessel was penetrated. Upon detection of any of these complications, the procedure was ceased. [25] This is a relatively high incidence; however, these were situations where potentially serious complications were identified prior to damage being done by injecting the local anaesthetic. The actual risk of harm occurring is unknown, but is considered to be much lower than the incidence of these events. Polaner et al. reviewed 6011 single shot caudal blocks, and identified 172 (2.9%) adverse events, including eighteen positive test doses, five dural punctures, 38 vascular punctures, 71 abandoned blocks and 26 failed blocks. However, no serious complications were encountered, as each of these adverse events was detected early and managed. [26] Methods of minimising the risk of these complications include test doses under ECG monitoring for inadvertent vascular injection (tachycardia will be seen) or monitoring for the onset of subarachnoid injection (rapid anaesthesia will occur). [13]

Ilioinguinal blocks, as with all peripheral nerve blocks, are inherently less risky than central blockade. Potential complications include:

- infection and abscess formation

- mechanical damage to the nerves.

More serious complications identified at case-report level include cases of:

- retroperitoneal haematoma. [27]

- small bowel perforation. [28]

- large bowel perforation. [29]

Polaner et al. reviewed 737 ilioinguinal-iliohypogastric blocks, and found one adverse event (positive blood aspiration). [26] This low morbidity rate was attributed to the widespread use of ultrasound guidance. [26]

A number of studies have examined the side effect profiles of both techniques:

- Time to first micturition has conflicting evidence – Markham et al. suggest delayed first micturition with caudal anaesthesia compared to inguinal block, [18] but others found no difference. [19,20]

- Post-operative time to ambulation is similar. [18,19]

- Post-operative vomiting has similar incidence, [18-20] and has been shown to be affected more by the accompanying method of general anaesthetic than the type of regional anaesthesia, with sevoflurane inhalation resulting in more post-operative vomiting than intravenous ketamine and propofol. [30]

- Time in recovery bay post-herniotomy was 45 ± 15 minutes for caudal, and 40 ± 9 minutes (p<0.02) for ilioinguinal; [19] however, this statistically significant result has little effect on clinical practice.

- Time to discharge (day surgery) was 176 ± 33 minutes for caudal block, and shorter for ilioinguinal block at 166 ± 26 minutes (p<0.02). [19] Again, these times are so similar as to have little practical effect.

These studies suggest that the techniques have similar side effect incidences and postoperative recovery profiles, and where differences exist, they are statistically but not clinically significant.

Use of general anaesthesia in combination with caudal anaesthesia or ilioinguinal block

A topic of special interest is whether awake regional, rather than general, anaesthesia should be used. Although the great majority of inguinal herniotomies are performed with general and regional anaesthesia, the increased risk of post-operative apnoea in neonates after general anaesthesia (particularly in ex-low birth weight and preterm neonates) is often cited. Awake regional anaesthesia is therefore touted as a safer alternative. As described above, ilioinguinal block is unsuitable for use as an awake technique, but awake caudal anaesthesia has been successfully described and practised. Geze et al. reported on performing awake caudal anaesthesia in low birth weight neonates and found that the technique was safe; [31] however, this study examined only fifteen cases and conclusions regarding safety drawn from such a small study are therefore limited. Other work in the area has also been limited by cohort size. [32-35] Lacrosse et al. noted the theoretical benefits of awake caudal anaesthesia for postoperative apnoea, but recognised that additional sedation is often necessary, and in a study of 98 patients, found that caudal block with light general anaesthesia using sevoflurane was comparable in terms of safety to caudal anaesthesia alone, and had the benefit of offering better surgical conditions. [36] Additionally, the ongoing concerns around neurotoxicity of general anaesthetic agents to the developing brain need further evaluation before recommendations can be made. [37] More research is needed to fully explore the role and safety of awake caudal anaesthesia, [38] and it currently remains a highly specialised area of practice, limited mainly to high risk infants. [39]

Adjuncts to local anaesthetics

There are many potential adjuncts for caudal anaesthesia, but ongoing concerns about their safety continue to limit their use. The effect of systemic opioid administration on the quality of caudal anaesthesia has been discussed in the literature. Somri et al. studied the administration of general anaesthesia and caudal block both with and without intravenous fentanyl, and measured plasma adrenaline and noradrenaline at induction, end of surgery and in recovery as a surrogate marker for pain and stress. They found that adding intravenous fentanyl resulted in no differences in plasma noradrenaline, and significantly less plasma adrenaline only in recovery. [40] Somri et al. questioned the practical significance of the result for adrenaline, noting no clinical difference in terms of blood pressure, heart rate or end-tidal CO2. Thus they suggested that general anaesthesia and caudal anaesthesia adequately block the stress response, and therefore there is no need for intraoperative fentanyl. [40] Interestingly, they also found no difference in post-operative analgesia requirements between the two groups. [40] Other authors noticed no difference in analgesia for caudal anaesthesia with or without intravenous fentanyl, and found a significant increase in post-operative nausea and vomiting with fentanyl. [41] Khosravi et al. found that pre-induction tramadol and general anaesthesia are slightly superior to general anaesthesia and ilioinguinal block for herniotomy post-operative pain relief, but suggested that the increased risk of nausea and vomiting outweighed the potential benefits. [42] Opioids have a limited role in caudal injection due to side effects, including respiratory depression, nausea, vomiting and urinary retention. [43] Taken together, these studies suggest that both ilioinguinal block and caudal block are effective on their own, and that the routine inclusion of systemic opioids for regional techniques in inguinal herniotomy is unnecessary and potentially harmful. Adding opioids to the caudal injection has risks that outweigh the potential benefits. [44]

Ketamine, particularly the S enantiomer, which is more potent and has a lower incidence of agitation and hallucinations than racemic ketamine, [44] has been studied as an adjuvant for caudal anaesthesia. Mossetti et al. reviewed multiple studies and found ketamine to increase the efficacy of caudal anaesthesia when combined with local anaesthetic compared to local anaesthetic alone. [44] Similar results were found for clonidine. [44] This is consistent with other work comparing caudal ropivacaine with either clonidine or fentanyl as adjuvants, which found clonidine has a superior side effect profile. [45] However, the use of caudal adjuvants has been limited due to concerns with potential neurotoxicity (reviewed by Jöhr and Berger). [4]

Local anaesthetic with adrenaline has been used to decrease the systemic absorption of short acting local anaesthetics and thus enhance the duration of blockade. Its sympathetic nervous effects are also useful for identifying inadvertent intravascular injection, which results in increased heart rate and increased systolic blood pressure. The advent of longer acting local anaesthetics has led to a decline in the use of adrenaline as an adjuvant to local anaesthetics, [44] and the validity of test doses of adrenaline has been called into doubt. [46]

Summary and Conclusion

Both caudal and ilioinguinal blocks are effective, safe techniques for inguinal herniotomy (Table 1). With these techniques there is no need for routine intravenous opioid analgesia, thus reducing the incidence of problems from these drugs in the postoperative period. The role of ultrasound guidance will continue to evolve, bringing new levels of safety and efficacy to ilioinguinal blocks. Light general anaesthesia with regional blockade is considered the first choice, with awake regional anaesthesia for herniotomy considered to be a highly specialised field reserved for a select group of patients. However, the ongoing concerns of neurotoxicity to the developing infant brain may fundamentally alter the neonatal anaesthesia landscape in the future.

Conflict of interest

None declared.

Acknowledgements

Associate Professor Rob McDougall, Deputy Director Anaesthesia and Pain Management, Royal Children’s Hospital Melbourne, for providing the initial inspiration for this review.

Correspondence

R Paul: r.paul@student.unimelb.edu.au

References

[1] King S, Beasley S. Surgical conditions in older children. In: South M, Isaacs D, editors. Practical Paediatrics. 7 ed. Australia: Churchill Livingstone Elsevier; 2012. p. 268-9.

[2] Dalens B, Veyckemans F. Anesthésie pédiatrique. Montpellier: Sauramps Médical; 2006.

[3] Brown K. The application of basic science to practical paediatric anaesthesia. Update in Anaesthesia. 2000(11).

[4] Jöhr M, Berger TM. Caudal blocks. Paediatr Anaesth. 2012;22(1):44-50.

[5] Raux O, Dadure C, Carr J, Rochette A, Capdevila X. Paediatric caudal anaesthesia. Update in Anaesthesia. 2010;26:32-6.

[6] Kundra P, Sivashanmugam T, Ravishankar M. Effect of needle insertion site on ilioinguinal-iliohypogastric nerve block in children. Acta Anaesthesiol Scand. 2006;50(5):622-6.

[7] Willschke H, Bosenberg A, Marhofer P, Johnston S, Kettner S, Eichenberger U, et al. Ultrasonographic-guided ilioinguinal/iliohypogastric nerve block in pediatric anesthesia: What is the optimal volume? Anesth Analg. 2006;102(6):1680-4.

[8] Willschke H, Marhofer P, Machata AM, Lönnqvist PA. Current trends in paediatric regional anaesthesia. Anaesthesia Supplement. 2010;65:97-104.

[9] Casati A, Putzu M. Bupivacaine, levobupivacaine and ropivacaine: are they clinically different? Best Pract Res, Clin Anaesthesiol. 2005;19(2):247-68.

[10] Breschan C, Jost R, Krumpholz R, Schaumberger F, Stettner H, Marhofer P, et al. A prospective study comparing the analgesic efficacy of levobupivacaine, ropivacaine and bupivacaine in pediatric patients undergoing caudal blockade. Paediatr Anaesth. 2005;15(4):301-6.

[11] Howard R, Carter B, Curry J, Morton N, Rivett K, Rose M, et al. Analgesia review. Paediatr Anaesth. 2008;18:64-78.

[12] Bozkurt P, Arslan I, Bakan M, Cansever MS. Free plasma levels of bupivacaine and ropivacaine when used for caudal block in children. Eur J Anaesthesiol. 2005;22(8):640-1.

[13] Patel D. Epidural analgesia for children. Contin Educ Anaesth Crit Care Pain. 2006;6(2):63-6.

[14] Menzies R, Congreve K, Herodes V, Berg S, Mason DG. A survey of pediatric caudal extradural anesthesia practice. Paediatr Anaesth. 2009;19(9):829-36.

[15] Armitage EN. Local anaesthetic techniques for prevention of postoperative pain. Br J Anaesth. 1986;58(7):790-800.

[16] Willschke H, Marhofer P, Bösenberg A, Johnston S, Wanzel O, Cox SG, et al. Ultrasonography for ilioinguinal/iliohypogastric nerve blocks in children. Br J Anaesth. 2005;95(2):226.

[17] Thong SY, Lim SL, Ng ASB. Retrospective review of ilioinguinal-iliohypogastric nerve block with general anesthesia for herniotomy in ex-premature neonates. Paediatr Anaesth. 2011;21(11):1109-13.

[18] Markham SJ, Tomlinson J, Hain WR. Ilioinguinal nerve block in children. A comparison with caudal block for intra and postoperative analgesia. Anaesthesia. 1986;41(11):1098-103.

[19] Splinter WM, Bass J, Komocar L. Regional anaesthesia for hernia repair in children: local vs caudal anaesthesia. Can J Anaesth. 1995;42(3):197-200.

[20] Cross GD, Barrett RF. Comparison of two regional techniques for postoperative analgesia in children following herniotomy and orchidopexy. Anaesthesia. 1987;42(8):845-9.

[21] Scott AD, Phillips A, White JB, Stow PJ. Analgesia following inguinal herniotomy or orchidopexy in children: a comparison of caudal and regional blockade. J R Coll Surg Edinb. 1989;34(3):143-5.

[22] Dalens B, Hasnaoui A. Caudal anesthesia in pediatric surgery: success rate and adverse effects in 750 consecutive patients. Anesth Analg. 1989;68(2):83-9.

[23] Lim S, Ng Sb A, Tan G. Ilioinguinal and iliohypogastric nerve block revisited: single shot versus double shot technique for hernia repair in children. Paediatr Anaesth. 2002;12(3):255.

[24] Jagannathan N, Sohn L, Sawardekar A, Ambrosy A, Hagerty J, Chin A, et al. Unilateral groin surgery in children: will the addition of an ultrasound-guided ilioinguinal nerve block enhance the duration of analgesia of a single-shot caudal block? Paediatr Anaesth. 2009;19(9):892-8.

[25] Beyaz S, Tokgöz O, Tüfek A. Caudal epidural block in children and infants: retrospective analysis of 2088 cases. Ann Saudi Med. 2011;31(5):494-7.

[26] Polaner DM, Taenzer AH, Walker BJ, Bosenberg A, Krane EJ, Suresh S, et al. Pediatric regional anesthesia network (PRAN): a multi-Institutional study of the use and incidence of complications of pediatric regional anesthesia. Anesth Analg. 2012;115(6):1353-64.

[27] Parvaiz MA, Korwar V, McArthur D, Claxton A, Dyer J, Isgar B. Large retroperitoneal haematoma: an unexpected complication of ilioinguinal nerve block for inguinal hernia repair. Anaesthesia. 2012;67(1):80-1.

[28] Amory C, Mariscal A, Guyot E, Chauvet P, Leon A, Poli-Merol ML. Is ilioinguinal/iliohypogastric nerve block always totally safe in children? Paediatr Anaesth. 2003;13(2):164-6.

[29] Jöhr M, Sossai R. Colonic puncture during ilioinguinal nerve block in a child. Anesth Analg. 1999;88(5):1051-2.

[30] Sarti A, Busoni P, Dellfoste C, Bussolin L. Incidence of vomiting in susceptible children under regional analgesia with two different anaesthetic techniques. Paediatr Anaesth. 2004;14(3):251-5.

[31] Geze S, Imamoglu M, Cekic B. Awake caudal anesthesia for inguinal hernia operations. Successful use in low birth weight neonates. Anaesthesist. 2011;60(9):841-4.

[32] Krane E, Haberkern C, Jacobson L. Postoperative apnea, bradycardia, and oxygen desaturation in formerly premature infants: prospective comparison of spinal and general anesthesia. Anesth Analg. 1995;80:7-13.

[33] Somri M, Gaitini L, Vaida S, Collins G, Sabo E, Mogilner G. Postoperative outcome in high risk infants undergoing herniorrhaphy: comparison between spinal and general anaesthesia. Anaesthesia. 1998;53:762-6.

[34] Welborn L, Rice L, Hannallah R, Broadman L, Ruttiman U, Fink R. Postoperative apnea in former preterm infants: prospective comparison of spinal and general anesthesia. Anesthesiology. 1990;72:838-42.

[35] Williams J, Stoddart P, Williams S, Wolf A. Post-operative recovery after inguinal herniotomy in ex-premature infants: comparison between sevoflurane and spinal anaesthesia. Br J Anaesth. 2001;86:366-71.

[36] Lacrosse D, Pirotte T, Veyckemans F. Bloc caudal associé à une anesthésie au masque facial (sévoflurane) chez le nourrisson à haut risque d’apnée : étude observationnelle. Ann Fr Anesth Reanim. 2012;31(1):29-33.

[37] Davidson AJ. Anesthesia and neurotoxicity to the developing brain: the clinical relevance. Paediatr Anaesth. 2011;21(7):716-21.

[38] Craven PD, Badawi N, Henderson-Smart DJ, O’Brien M. Regional (spinal, epidural, caudal) versus general anaesthesia in preterm infants undergoing inguinal herniorrhaphy in early infancy. Cochrane Database of Systematic Reviews. 2003(3).

[39] Bouchut JC, Dubois R, Foussat C, Moussa M, Diot N, Delafosse C, et al. Evaluation of caudal anaesthesia performed in conscious ex-premature infants for inguinal herniotomies. Paediatr Anaesth. 2001;11(1):55-8.

[40] Somri M, Tome R, Teszler CB, Vaida SJ, Mogilner J, Shneeifi A, et al. Does adding intravenous fentanyl to caudal block in children enhance the efficacy of multimodal analgesia as reflected in the plasma level of catecholamines? Eur J Anaesthesiol. 2007;24(5):408-13.

[41] Kokinsky E, Nilsson K, Larsson L. Increased incidence of postoperative nausea and vomiting without additional analgesic effects when a low dose of intravenous fentanyl is combined with a caudal block. Paediatr Anaesth. 2003;13:334-8.

[42] Khosravi MB, Khezri S, Azemati S. Tramadol for pain relief in children undergoing herniotomy: a comparison with ilioinguinal and iliohypogastric blocks. Paediatr Anaesth. 2006;16(1):54-8.

[43] Lloyd-Thomas A, Howard R. A pain service for children. Paediatr Anaesth. 1994;4:3-15.

[44] Mossetti V, Vicchio N, Ivani G. Local anesthetics and adjuvants in pediatric regional anesthesia. Curr Drug Targets. 2012;13(7):952-60.

[45] Shukla U, Prabhakar T, Malhotra K. Postoperative analgesia in children when using clonidine or fentanyl with ropivacaine given caudally. J Anaesthesiol, Clin Pharmacol. 2011;27(2):205-10.

[46] Tobias JD. Caudal epidural block: a review of test dosing and recognition of systemic injection in children. Anesth Analg. 2001;93(5):1156-61.
