Venous thromboembolism (VTE), comprising deep vein thrombosis and pulmonary embolism, is a common disease process that accounts for significant morbidity and mortality in Australia. As the clinical features of VTE can be non-specific, clinicians need to maintain a high index of suspicion. Diagnosis relies primarily on a combination of clinical assessment, D-dimer testing and radiological investigation. Following an evidence-based algorithm for the investigation of suspected VTE aims to reduce over-investigation whilst minimising the risk of missing clinically significant disease. Multiple risk factors for VTE exist; significant risk factors such as recent surgery, malignancy, acute medical illness, prior VTE and thrombophilia are common amongst both hospitalised patients and those in the community. Management of VTE is primarily anticoagulation, which has traditionally involved unfractionated or low molecular weight heparin and warfarin. The non-vitamin K antagonist oral anticoagulants, also known as the novel oral anticoagulants (NOACs), including rivaroxaban and dabigatran, represent an exciting alternative to traditional therapy for the prevention and management of VTE. The significant burden of VTE is best reduced through a combination of prophylaxis, early diagnosis, rapid implementation of therapy and management of recurrence and potential sequelae. Junior doctors are well placed to identify patients at risk of VTE and to prescribe thromboprophylaxis where necessary. A significant body of evidence exists to guide the diagnosis and treatment of VTE; this article provides a concise summary of the pathophysiology, natural history, clinical features, diagnosis and management of VTE.

Introduction

Venous thromboembolism (VTE) is a disease process comprising deep vein thrombosis (DVT) and pulmonary embolism (PE). VTE is a common problem, with an estimated incidence of one to two per 1,000 population each year, [1,2] and approximately 2,000 Australians die each year from VTE. [3] PE is one of the most common preventable causes of in-hospital death [4] and has a short-term mortality rate of approximately 17%. [5,6] VTE is also associated with a significant financial burden; the cost of VTE in Australia in 2008 was estimated at $1.72 billion. [7] Important sequelae of VTE include post-thrombotic syndrome, recurrent VTE, chronic thromboembolic pulmonary hypertension (CTEPH) and death. [8,9]

Because of the high incidence of VTE and its potential for significant sequelae, it is imperative that medical students and junior doctors have a sound understanding of its pathophysiology, diagnosis and management.

Pathophysiology and risk factors

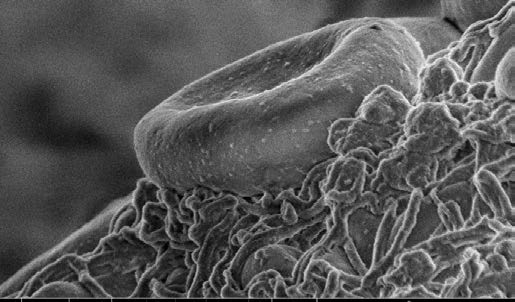

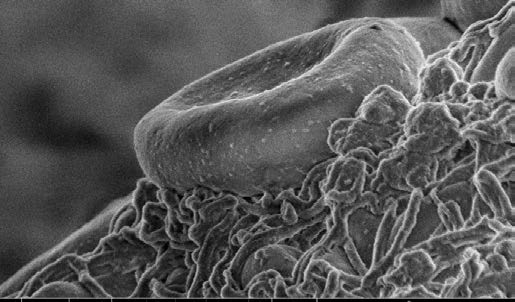

The pathogenesis of venous thrombosis is complex and our understanding of the disease is constantly evolving. Although there is no published evidence that Virchow ever explicitly described a triad for the formation of venous thrombosis, [10] Virchow’s triad remains clinically relevant when considering the pathogenesis of venous thrombosis. The commonly cited triad consists of alterations in the constituents of blood, vascular endothelial injury and alterations in blood flow. Each component of the triad provides a framework for important VTE risk factors, and these risk factors form an integral part of the scoring systems used in the risk stratification of suspected VTE. In the community, risk factors are present in over 75% of patients with VTE, and over 50% report recent or current hospitalisation or residence in a nursing home. [11] Patients may have a combination of inherited and acquired thrombophilic defects, and combinations of risk factors have at least an additive effect on the risk of VTE. Risk factors for VTE are presented in Table 1.

Thrombophilia

Thrombophilia refers to a predisposition to thrombosis, which may be inherited or acquired. [14] The prevalence of thrombophilia at first presentation of VTE is approximately 50%, with the highest prevalence found in younger patients and those with unprovoked VTE. [15] Inherited thrombophilias are common in the Caucasian Australian population. Factor V Leiden, which confers resistance to activated protein C, has a birth prevalence of 9.5% for heterozygosity and 0.7% for homozygosity. The prothrombin gene mutation (G20210A) is another common inherited thrombophilia, with a prevalence of 4.1% for heterozygosity and 0.2% for homozygosity. [16] Other significant thrombophilias include antithrombin deficiency, protein C deficiency, protein S deficiency and causes of hyperhomocysteinaemia. [16,17] Antiphospholipid syndrome is an acquired disorder characterised by antiphospholipid antibodies together with arterial or venous thrombosis or obstetric morbidity, including recurrent spontaneous abortion. Antiphospholipid syndrome is an important cause of VTE and may occur as a primary disorder or secondary to autoimmune or rheumatic diseases such as systemic lupus erythematosus. [18]

Testing for hereditary thrombophilia is generally not recommended, as it does not affect the clinical management of most patients with VTE [19,20] and there is no evidence that such testing alters the risk of recurrent VTE. [21] There are a few exceptions, such as women of reproductive age with a family history of thrombophilia, in whom a positive result may lead to a decision to avoid the oral contraceptive pill or to institute prophylaxis in the peripartum period. [22]

Natural history

Most DVTs originate in the deep veins of the calf. Thrombi originating in the calf are often asymptomatic and confer a low risk of clinically significant PE. Approximately 25% of untreated calf DVTs extend into the proximal veins of the leg, and 80% of patients with symptomatic DVT have involvement of the proximal veins. [9] Symptomatic PE occurs in a significant proportion of patients with untreated proximal DVT; however, the exact risk of embolisation from proximal DVT is difficult to estimate. [9,23]

Pulmonary vascular remodelling may occur following PE and may result in CTEPH. [24] CTEPH is thought to be caused by unresolved pulmonary emboli and is associated with significant morbidity and mortality. It develops in approximately 1-4% of patients with treated PE. [25,26]

Post-thrombotic syndrome is an important potential long-term consequence of DVT, which is characterised by leg pain, oedema, venous ectasia and venous ulceration. Within 2 years of symptomatic DVT, post-thrombotic syndrome develops in 23-60% of patients [27] and is associated with poorer quality of life and significant economic burden. [28]

Diagnosis

Signs and symptoms of VTE are often non-specific and may mimic many other common clinical conditions (Table 2). In the primary care setting, fewer than 30% of patients with signs and symptoms suggestive of DVT have a sonographically proven thrombus. [29] Some clinical features of superficial thrombophlebitis overlap with those of DVT. Superficial thrombophlebitis carries a small risk of contiguous extension of the thrombus, DVT and PE, and treatment with low-dose anticoagulant therapy or NSAIDs may be recommended. [30]

Deep vein thrombosis

Clinical features

Symptoms of DVT include pain, cramping and heaviness in the lower extremity, swelling, and a cyanotic or blue-red discolouration of the limb. [31] Signs may include superficial vein dilation, warmth and unilateral oedema. [31,32] Pain in the calf on forceful dorsiflexion of the foot was described as a sign of DVT by the American surgeon John Homans in 1944. [33] Homans’ sign is non-specific and unreliable for the diagnosis of DVT. [34]

Investigations

Several scoring tools have been evaluated for assessing the pre-test probability of DVT. One commonly used, validated tool is the Modified Wells score, presented in Table 3. [35] The Modified Wells score categorises patients as either likely or unlikely to have DVT.
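The arithmetic behind the Modified Wells score is a simple sum of item points compared with a threshold. Because Table 3 is not reproduced in this text, the sketch below assumes the widely published version of the score (one point for each clinical item and minus two points if an alternative diagnosis is at least as likely as DVT), together with the ≥ 2 "likely" threshold stated in this article. The item keys and the functions `modified_wells_dvt` and `dvt_likely` are hypothetical names for illustration; this is not a validated clinical tool.

```python
from typing import Iterable

# Assumed Modified Wells DVT items and points (widely published version;
# check against Table 3). The keys are hypothetical identifiers.
WELLS_DVT_ITEMS = {
    "active_cancer": 1,
    "paralysis_paresis_or_recent_leg_cast": 1,
    "bedridden_3_days_or_major_surgery_12_weeks": 1,
    "tenderness_along_deep_veins": 1,
    "entire_leg_swollen": 1,
    "calf_swelling_over_3cm": 1,
    "pitting_oedema_symptomatic_leg": 1,
    "collateral_superficial_veins": 1,
    "previous_dvt": 1,
    "alternative_diagnosis_as_likely": -2,
}


def modified_wells_dvt(findings: Iterable[str]) -> int:
    """Sum the points of each finding present (each counted once).

    An unrecognised finding name raises KeyError rather than being ignored.
    """
    return sum(WELLS_DVT_ITEMS[name] for name in set(findings))


def dvt_likely(score: int) -> bool:
    """Threshold used in this article: a score of 2 or more means 'likely'."""
    return score >= 2
```

For example, active cancer plus a swollen leg in a patient with an equally likely alternative diagnosis scores 1 + 1 - 2 = 0, which falls in the "unlikely" category.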

D-dimer testing is the recommended investigation in patients considered unlikely to have DVT, as a negative D-dimer effectively rules out DVT in this group. [36] D-dimer testing has several important limitations. Most studies of its use in DVT have been performed in non-pregnant outpatients, and because D-dimer is a fibrin degradation product, it is frequently raised in inflammatory states. This limits its usefulness in post-operative patients and in many hospitalised patients.

Venous compression ultrasound with Doppler flow is indicated as the initial investigation in patients who are considered likely to have DVT (Modified Wells score ≥ 2) and in patients with a positive D-dimer. Compression ultrasonography is the most widely used imaging modality because of its high sensitivity and specificity, non-invasive nature and low cost. Limitations include operator-dependent accuracy and reduced sensitivity and specificity for DVT of the pelvic veins or small calf veins, and in obese patients. [32]
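The two-tier DVT pathway described above (ultrasound for "likely" patients; D-dimer first for "unlikely" patients, with ultrasound only if it is positive) can be expressed as a small decision function. This is a minimal sketch that takes the Modified Wells score as a plain integer; `NextStep` and `dvt_next_step` are hypothetical names, and the function deliberately ignores the caveats above about post-operative, hospitalised and pregnant patients, in whom a raised D-dimer is less informative.

```python
from enum import Enum
from typing import Optional


class NextStep(Enum):
    DVT_EXCLUDED = "DVT effectively excluded"
    REQUEST_D_DIMER = "request D-dimer"
    ULTRASOUND = "venous compression ultrasound with Doppler"


def dvt_next_step(wells_score: int, d_dimer_positive: Optional[bool] = None) -> NextStep:
    """Next investigation for suspected DVT under the two-tier pathway."""
    if wells_score >= 2:
        # DVT 'likely': proceed directly to ultrasound.
        return NextStep.ULTRASOUND
    if d_dimer_positive is None:
        # DVT 'unlikely' and no result yet: D-dimer comes first.
        return NextStep.REQUEST_D_DIMER
    # DVT 'unlikely': a negative D-dimer rules out DVT; a positive one needs imaging.
    return NextStep.ULTRASOUND if d_dimer_positive else NextStep.DVT_EXCLUDED
```

Keeping the D-dimer result optional lets the same function answer both "what should I order next?" and "what does this result mean?".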

Pulmonary embolism

Clinical features

Approximately 10% of symptomatic PEs are fatal within one hour of the onset of symptoms, [9] and delayed diagnosis remains common because of the non-specific presentation. [37] The clinical presentation depends on several factors, including the size of the embolus, the rapidity of obstruction of the pulmonary vascular bed and the patient’s haemodynamic reserve. Symptoms may include sudden or gradual onset of dyspnoea, chest pain, cough, haemoptysis, palpitations and syncope. Signs may include tachycardia, tachypnoea, fever, cyanosis and the clinical features of DVT. Signs of pulmonary infarction, such as a pleural friction rub and reduced breath sounds, may develop later. [31] Patients may also present with systemic arterial hypotension, with or without clinical features of obstructive shock. [5,38]

Investigations

The first step in the diagnosis of suspected PE is to estimate the clinical pre-test probability using a validated tool such as the Wells or Geneva score. Clinician gestalt may be used in place of a validated scoring tool; however, it may have a lower specificity and therefore lead to more unnecessary pulmonary imaging. [39] Neither clinician gestalt nor a clinical decision rule can accurately exclude PE on its own.

An electrocardiogram (ECG) is often performed early in the presentation of a patient with suspected PE, and a variety of electrocardiographic changes associated with acute PE have been described. Changes consistent with right heart strain and right atrial enlargement reflect mechanical obstruction of the pulmonary artery outflow tract. [40] Other ECG changes include sinus tachycardia, ST segment or T wave abnormalities, QRS axis deviation (left or right) and right bundle branch block, among others. [40] The S1Q3T3 pattern, a prominent S wave in lead I with a Q wave and inverted T wave in lead III, is a sign of acute cor pulmonale. It is not pathognomonic for PE and occurs in fewer than 25% of patients with acute PE. [40]
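The Wells score for PE, mentioned above as one option for estimating pre-test probability, weights its items unequally. The sketch below assumes the widely published item weights and the three-tier interpretation (low below 2, moderate from 2 to 6, high above 6); neither is reproduced in this article, so they should be checked against a primary source. Names such as `WELLS_PE_ITEMS` and `pe_pretest_category` are hypothetical, and the code only illustrates the arithmetic.

```python
from typing import Iterable

# Assumed item weights for the Wells score for PE (widely published version).
WELLS_PE_ITEMS = {
    "clinical_signs_of_dvt": 3.0,
    "pe_most_likely_diagnosis": 3.0,
    "heart_rate_over_100": 1.5,
    "immobilisation_or_surgery_past_4_weeks": 1.5,
    "previous_dvt_or_pe": 1.5,
    "haemoptysis": 1.0,
    "malignancy": 1.0,
}


def wells_pe(findings: Iterable[str]) -> float:
    """Sum the weights of the findings present (each counted once)."""
    return sum(WELLS_PE_ITEMS[name] for name in set(findings))


def pe_pretest_category(score: float) -> str:
    """Assumed three-tier reading: below 2 low, 2 to 6 moderate, above 6 high."""
    if score < 2:
        return "low"
    if score <= 6:
        return "moderate"
    return "high"
```

For example, a heart rate over 100 with haemoptysis scores 1.5 + 1.0 = 2.5, a moderate pre-test probability.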

In patients with a low pre-test probability, a negative quantitative D-dimer effectively excludes PE. [39] The conventional D-dimer cut-off value (500 µg/L) has reduced specificity in older patients, leading to false-positive results. [41] A recent meta-analysis found that using an age-adjusted D-dimer cut-off value (age × 10 µg/L in patients older than 50 years) increases the specificity of the test with little effect on sensitivity. [42] The pulmonary embolism rule-out criteria (PERC), outlined in Table 4, may be applied to patients with a low pre-test probability to reduce the number undergoing D-dimer testing. [43] A recent meta-analysis demonstrated that, in the emergency department, the combination of a low pre-test probability and a negative PERC rule indicates a probability of PE so low that the risks of further investigation outweigh the benefits. [44]
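The rule-out steps above for a patient with a low pre-test probability can be sketched as follows. The eight PERC criteria are not reproduced in this text (see Table 4), so the criteria below are an assumption based on the commonly published version of the rule, as is restricting the age-adjusted cut-off (age × 10 µg/L) to patients older than 50 years. The `PercInputs` dataclass, the function names and the result strings are hypothetical. Because PERC itself requires an age under 50, the age-adjusted cut-off in this sketch only matters for patients who have already failed PERC.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PercInputs:
    """Findings needed for the (assumed) eight PERC criteria."""
    age_years: int
    heart_rate: int  # beats per minute
    sao2_percent: float  # oxygen saturation
    haemoptysis: bool
    oestrogen_use: bool
    prior_vte: bool  # previous DVT or PE
    unilateral_leg_swelling: bool
    recent_surgery_or_trauma: bool  # requiring hospitalisation in the past 4 weeks


def perc_negative(p: PercInputs) -> bool:
    """PERC is negative only when every criterion is met."""
    return (
        p.age_years < 50
        and p.heart_rate < 100
        and p.sao2_percent >= 95
        and not (
            p.haemoptysis
            or p.oestrogen_use
            or p.prior_vte
            or p.unilateral_leg_swelling
            or p.recent_surgery_or_trauma
        )
    )


def d_dimer_cutoff_ug_per_l(age_years: int) -> int:
    """Conventional 500 ug/L cut-off; age x 10 ug/L above 50 years (assumed)."""
    return age_years * 10 if age_years > 50 else 500


def low_probability_pe_step(p: PercInputs, d_dimer_ug_per_l: Optional[float] = None) -> str:
    """Next step for a patient already judged to have a LOW pre-test probability."""
    if perc_negative(p):
        return "no further testing"
    if d_dimer_ug_per_l is None:
        return "request D-dimer"
    if d_dimer_ug_per_l < d_dimer_cutoff_ug_per_l(p.age_years):
        return "PE excluded"
    return "pulmonary imaging"
```

For a 70-year-old, the sketch uses a cut-off of 700 µg/L, so a D-dimer of 600 µg/L would be treated as negative where the conventional 500 µg/L threshold would have led to imaging.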

Patients with a high pre-test probability or a positive D-dimer test should undergo pulmonary imaging. Multidetector computed tomography pulmonary angiography (CTPA) is widely considered the imaging modality of choice for PE, given its high sensitivity and specificity and its ability to identify alternative diagnoses. [45,46] CTPA should be used only when there is a clear indication, because of the significant radiation exposure and the risks of allergic reactions and contrast-induced nephropathy. [47] Concerns have also been raised about overdiagnosis of PE through the detection of small subsegmental emboli. [48]

Ventilation-perfusion (V/Q) lung scintigraphy is an alternative pulmonary imaging modality to CTPA. A normal V/Q scan excludes PE; however, a significant proportion of patients will have a ‘non-diagnostic’ result requiring further imaging. [49] Non-diagnostic scans are more common in patients with pre-existing respiratory disease or an abnormal chest radiograph, and less common in younger and pregnant patients. [48,50] Compared with CTPA, V/Q scanning is associated with fewer adverse effects and less radiation exposure, and it is often used when a contraindication to CTPA exists. [49,50]

Bedside echocardiography is useful if CT is not immediately available or if the patient is too unstable for transfer to radiology. [51,52] Echocardiography may reveal right ventricular dysfunction, which informs prognosis and the decision to use thrombolytic therapy in massive and sub-massive PE. [51]

The diagnosis of PE during pregnancy is an area of controversy. [54] The diagnostic value of D-dimer during pregnancy is limited when the conventional threshold is used. Foetal radiation dose is minimal with both V/Q scanning and CTPA, although it is higher with V/Q scanning. In contrast, CTPA delivers a much higher radiation dose to maternal breast tissue and therefore carries an increased risk of breast cancer. [53,54] In light of these risks, some experts advocate bilateral compression Doppler ultrasound for suspected PE in pregnancy. [54] However, if this is negative and clinical suspicion remains high, pulmonary imaging is still required.

Prophylaxis

Multiple guidelines exist to direct clinicians on the use of thromboprophylaxis in both medical and surgical patients. [3,55-58] Implementing thromboprophylaxis involves assessing the patient’s risk of VTE and their risk of adverse effects of thromboprophylaxis, including bleeding, and identifying any contraindications.

Patients at high risk include those undergoing any surgical procedure, especially abdominal, pelvic or orthopaedic surgery. Medical patients at high risk include those with myocardial infarction, malignancy, heart failure, ischaemic stroke and inflammatory bowel disease. [3]

Mechanical options for thromboprophylaxis include encouraging mobility, graduated compression stockings, intermittent pneumatic compression devices and venous foot pumps. Mechanical measures are often combined with pharmacological thromboprophylaxis. The strength of evidence for each anticoagulant varies with the surgical procedure or medical condition in question; however, unfractionated heparin (UFH) and low molecular weight heparin (LMWH) remain the mainstay of VTE prophylaxis. [3] The NOACs, also referred to as direct oral anticoagulants, notably rivaroxaban, apixaban and dabigatran, have been studied most extensively in hip and knee arthroplasty patients, in whom they have been shown to be both efficacious and safe. [59-61] The use of aspirin for the prevention of VTE following orthopaedic surgery remains controversial, despite its recommendation in recent guidelines. [62] Pharmacological prophylaxis is recommended until the patient is fully mobile. In certain circumstances, such as following total hip or knee arthroplasty and hip fracture surgery, extended-duration prophylaxis for up to 35 days post-operatively is recommended. [3,62]

Management

The aim of treatment is to relieve current symptoms, prevent progression of the disease, reduce the potential for sequelae and prevent recurrence. Anticoagulation remains the cornerstone of management of VTE.

Patients with PE and haemodynamic instability (hypotension, persistent bradycardia or pulselessness), so-called ‘massive PE’, may require urgent treatment with thrombolytic therapy. Thrombolysis reduces mortality in haemodynamically unstable patients; however, it is associated with a risk of major bleeding. [63] Surgical thrombectomy and catheter-based interventions represent an alternative to thrombolysis in patients with massive PE in whom thrombolysis is contraindicated. [64] The use of thrombolytic therapy in patients with evidence of right ventricular dysfunction and myocardial injury, but without hypotension or haemodynamic instability, remains controversial. A recent study found that fibrinolysis in this intermediate-risk group reduces rates of haemodynamic compromise but significantly increases the risk of intracranial and other major bleeding. [65]

For the majority of patients with VTE, anticoagulation is the mainstay of treatment. Acute treatment involves UFH, LMWH or fondaparinux. [50]

UFH binds to antithrombin III, increasing its ability to inactivate thrombin, factor Xa and other coagulation factors. [66] UFH is usually given as an initial intravenous bolus followed by a continuous infusion. UFH therapy requires monitoring of the activated partial thromboplastin time (aPTT) and is associated with a risk of heparin-induced thrombocytopenia. [66] The therapeutic aPTT range and dosing regimen vary between institutions. UFH is usually preferred in patients with severe renal impairment, when rapid reversal of anticoagulation may be required, and in obstructive shock where thrombolysis is being considered. [50]

LMWH is administered subcutaneously in a weight-adjusted dosing regimen once or twice daily. [51] Compared with UFH, LMWH has a more predictable anticoagulant response and does not usually require monitoring. [67] In obese patients and those with significant renal dysfunction, LMWH may require dose adjustment or monitoring of anti-factor Xa activity. [67]

Therapy with a vitamin K antagonist, most commonly warfarin, should be commenced at the same time as parenteral anticoagulation. The parenteral anticoagulant should be discontinued when the international normalised ratio (INR) has reached at least 2.0 on two consecutive measurements and the two treatments have overlapped for at least five days. [68] This overlap is required because warfarin alone may cause an initial transient prothrombotic state: warfarin rapidly depletes the natural anticoagulant protein C, whereas depletion of coagulation factors II and X takes several days. [69]
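The stopping rule for the parenteral anticoagulant combines two conditions, both of which must hold. A minimal sketch, assuming INR results are supplied as a chronological list and the overlap is counted in whole days; `can_stop_parenteral` is a hypothetical name, and the function says nothing about dosing.

```python
from typing import Sequence


def can_stop_parenteral(inr_results: Sequence[float], overlap_days: int) -> bool:
    """Return True once both conditions for stopping the parenteral agent hold.

    inr_results: INR values in chronological order (hypothetical input format).
    overlap_days: days of combined parenteral and warfarin therapy so far.
    """
    if overlap_days < 5:
        # Fewer than five days of overlap: continue the parenteral anticoagulant.
        return False
    if len(inr_results) < 2:
        # At least two consecutive measurements are needed.
        return False
    # The two most recent consecutive INRs must both be at least 2.0.
    return all(inr >= 2.0 for inr in inr_results[-2:])
```

For instance, INRs of 1.4, 2.1 and 2.3 after four days of overlap do not yet allow the parenteral agent to be stopped; the same results on day five do.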

The NOACs represent an attractive alternative to traditional anticoagulants for the prevention and management of VTE.

Rivaroxaban is an oral anticoagulant that directly inhibits factor Xa. [66] Rivaroxaban has been shown to be as efficacious as standard therapy (parenteral anticoagulation and warfarin) for the treatment of proximal DVT and symptomatic PE. [70,71] Compared with conventional therapy, rivaroxaban may be associated with a lower risk of major bleeding. [70] Rivaroxaban is an attractive alternative to standard therapy as it does not require parenteral administration, is given at a fixed daily dose, does not require laboratory monitoring and has few drug-drug and food interactions. [70,71]

Dabigatran etexilate is an orally administered direct thrombin inhibitor. Dabigatran is non-inferior to warfarin for the treatment of PE and proximal DVT after a period of parenteral anticoagulation. [72] Its safety profile is similar to that of warfarin; however, dabigatran requires no laboratory monitoring. [72]

The lack of a requirement for monitoring is a significant benefit of the NOACs over warfarin. The role and clinical relevance of monitoring the anticoagulant activity of these agents are subjects of ongoing research and debate. [73] Anti-factor Xa based assays may be used to determine the concentration of the direct factor Xa inhibitors in specific clinical circumstances. [74,75] The relative intensity of anticoagulation due to dabigatran can be estimated by the aPTT, and that due to rivaroxaban by the PT or aPTT. [76] However, results vary significantly depending on the reagent used by the laboratory. Routine monitoring of the NOACs is not currently recommended.

The major studies evaluating the NOACs excluded patients at high risk of bleeding, those with a creatinine clearance of <30 mL/min, pregnant women and those with liver disease, [70-72] so caution must be applied when using these agents in such patient groups. The NOACs are cleared by the kidneys to variable degrees. Warfarin or dose-adjusted LMWH is preferred for patients with reduced renal function (creatinine clearance <30 mL/min) who require long-term anticoagulation.
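The <30 mL/min threshold above refers to creatinine clearance, which in practice is usually estimated rather than measured. The article does not name an estimator; the sketch below assumes the Cockcroft-Gault equation, a common choice for drug-dosing decisions, with serum creatinine converted from µmol/L (the unit reported by Australian laboratories) to mg/dL using a factor of 88.4. The function names are hypothetical, and the sketch is illustrative only.

```python
# Conversion factor: serum creatinine in umol/L divided by this gives mg/dL.
UMOL_PER_L_PER_MG_PER_DL = 88.4


def cockcroft_gault_ml_min(age_years: float, weight_kg: float,
                           creatinine_umol_l: float, female: bool) -> float:
    """Estimated creatinine clearance (mL/min) by the Cockcroft-Gault equation."""
    creatinine_mg_dl = creatinine_umol_l / UMOL_PER_L_PER_MG_PER_DL
    crcl = (140 - age_years) * weight_kg / (72 * creatinine_mg_dl)
    # The equation applies a 0.85 correction factor for women.
    return crcl * 0.85 if female else crcl


def below_noac_trial_threshold(crcl_ml_min: float) -> bool:
    """True if clearance is below the 30 mL/min exclusion threshold in the text."""
    return crcl_ml_min < 30
```

For example, an 80-year-old woman weighing 60 kg with a serum creatinine of 150 µmol/L has an estimated clearance of about 25 mL/min, below the threshold.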

There is concern about the lack of a specific reversal agent for the NOACs. [77,78] Consultation with haematology is recommended if significant bleeding occurs during NOAC therapy. Evidence for the use of agents such as tranexamic acid, recombinant factor VIIa and prothrombin complex concentrate is very limited. [77,78] Haemodialysis may significantly reduce plasma levels of dabigatran, as the drug has relatively low protein binding. [77,78]

Inferior vena cava (IVC) filters may be placed in patients with VTE who have a contraindication to anticoagulation. IVC filters may prevent PE; however, they may increase the risk of DVT and IVC thrombosis. The use of IVC filters remains controversial owing to a lack of evidence. [79]

Recurrence

The risk of recurrence differs significantly depending on whether the initial VTE event was unprovoked or associated with a transient risk factor. [9] Patients with idiopathic (unprovoked) VTE have a significantly higher risk of recurrence than those with transient risk factors, and isolated calf DVTs carry a lower risk of recurrence than proximal DVT or PE. After cessation of anticoagulant therapy, the risk of recurrence is as high as 10% per year in some patient groups. [9]

The duration of anticoagulation should be based on patient preference and on weighing the risk of recurrence against the risk of complications of therapy. Generally, anticoagulation should be continued for a minimum of three months, and the decision to continue should be reassessed regularly. Recommendations for the duration of anticoagulation are presented in Table 5. Currently published guidelines recommend extended anticoagulation with a vitamin K antagonist such as warfarin. Evaluation of the new direct oral anticoagulants for treatment and prevention of recurrence is ongoing. Recent evidence supports the use of dabigatran and rivaroxaban for the secondary prevention of VTE, with efficacy similar to standard therapy and reduced rates of major bleeding. [70,71,80]

Aspirin has been shown to reduce VTE recurrence in patients with previous unprovoked VTE. After up to 18 months of therapy, aspirin reduces the rate of VTE recurrence by 40% compared with placebo. [81]

Conclusion

VTE is a commonly encountered problem and is associated with significant short- and long-term morbidity. A sound understanding of the pathogenesis of VTE guides clinical assessment, diagnosis and management. The prevention and management of VTE continue to evolve with the ongoing evaluation of the NOACs. Anticoagulation remains the mainstay of therapy for VTE, with additional measures, including thrombolysis, used in select cases. This article has provided medical students with an evidence-based review of current diagnostic and management strategies for venous thromboembolic disease.

Acknowledgements

I would like to thank Marianne Turner for her help with editing the manuscript.

Conflict of interest

None declared.

Correspondence

R Pow: richardeamonpow@gmail.com

References

[1] Oger E. Incidence of venous thromboembolism: a community-based study in Western France. Thromb Haemost. 2000;83(5):657-60.

[2] Cushman M, Tsai AW, White RH, Heckbert SR, Rosamond WD, Enright P, et al. Deep vein thrombosis and pulmonary embolism in two cohorts: the longitudinal investigation of thromboembolism etiology. Am J Med. 2004;117(1):19-25.

[3] National Health and Medical Research Council. Clinical Practice Guideline for the Prevention of Venous Thromboembolism in Patients Admitted to Australian Hospitals. Melbourne: National Health and Medical Research Council; 2009. 157 p.

[4] National Institute of Clinical Studies. Evidence-Practice Gaps Report Volume 1. Melbourne: National Institute of Clinical Studies; 2003. 38 p.

[5] Goldhaber SZ, Visani L, De Rosa M. Acute pulmonary embolism: clinical outcomes in the International Cooperative Pulmonary Embolism Registry (ICOPER). Lancet. 1999;353(9162):1386-9.

[6] Huang CM, Lin YC, Lin YJ, Chang SL, Lo LW, Hu YF, et al. Risk stratification and clinical outcomes in patients with acute pulmonary embolism. Clin Biochem. 2011;44(13):1110-5.

[7] Access Economics Pty Ltd. The burden of venous thromboembolism in Australia. Canberra: Access Economics Pty Ltd; 2008. 50 p.

[8] Lang IM, Klepetko W. Chronic thromboembolic pulmonary hypertension: an updated review. Curr Opin Cardiol. 2008;23(6):555-9.

[9] Kearon C. Natural history of venous thromboembolism. Circulation. 2003;107(23 Suppl 1):22-30.

[10] Dickinson BC. Venous thrombosis: On the History of Virchow’s Triad. Univ Toronto Med J. 2004;81(3):6.

[11] Heit JA, O’Fallon WM, Petterson TM, Lohse CM, Silverstein MD, Mohr DN, et al. Relative impact of risk factors for deep vein thrombosis and pulmonary embolism: a population-based study. Arch Intern Med. 2002;162(11):1245-8.

[12] Anderson FA, Jr., Spencer FA. Risk factors for venous thromboembolism. Circulation. 2003;107(23 Suppl 1):9-16.

[13] Ho WK, Hankey GJ, Lee CH, Eikelboom JW. Venous thromboembolism: diagnosis and management of deep venous thrombosis. Med J Aust. 2005;182(9):476-81.

[14] Heit JA. Thrombophilia: common questions on laboratory assessment and management. Hematology Am Soc Hematol Educ Program. 2007;1:127-35.

[15] Weingarz L, Schwonberg J, Schindewolf M, Hecking C, Wolf Z, Erbe M, et al. Prevalence of thrombophilia according to age at the first manifestation of venous thromboembolism: results from the MAISTHRO registry. Br J Haematol. 2013;163(5):655-65.

[16] Gibson CS, MacLennan AH, Rudzki Z, Hague WM, Haan EA, Sharpe P, et al. The prevalence of inherited thrombophilias in a Caucasian Australian population. Pathology. 2005;37(2):160-3.

[17] Genetics Education in Medicine Consortium. Genetics in Family Medicine: The Australian Handbook for General Practitioners. Canberra: The Australian Government Agency Biotechnology Australia; 2007. 349 p.

[18] Giannakopoulos B, Krilis SA. The pathogenesis of the antiphospholipid syndrome. N Engl J Med. 2013;368(11):1033-44.

[19] Ho WK, Hankey GJ, Eikelboom JW. Should adult patients be routinely tested for heritable thrombophilia after an episode of venous thromboembolism? Med J Aust. 2011;195(3):139-42.

[20] Middeldorp S. Evidence-based approach to thrombophilia testing. J Thromb Thrombolysis. 2011;31(3):275-81.

[21] Cohn DM, Vansenne F, de Borgie CA, Middeldorp S. Thrombophilia testing for prevention of recurrent venous thromboembolism. Cochrane Database Syst Rev [Internet] 2012 [Cited 2014 Sep 10]. Available from: http://www.ncbi.nlm.nih.gov/pubmed/23235639

[22] Middeldorp S, van Hylckama Vlieg A. Does thrombophilia testing help in the clinical management of patients? Br J Haematol. 2008;143(3):321-35.

[23] Markel A. Origin and natural history of deep vein thrombosis of the legs. Semin Vasc Med. 2005;5(1):65-74.

[24] Hoeper MM, Mayer E, Simonneau G, Rubin LJ. Chronic thromboembolic pulmonary hypertension. Circulation. 2006;113(16):2011-20.

[25] Pengo V, Lensing AW, Prins MH, Marchiori A, Davidson BL, Tiozzo F, et al. Incidence of chronic thromboembolic pulmonary hypertension after pulmonary embolism. N Engl J Med. 2004;350(22):2257-64.

[26] Poli D, Grifoni E, Antonucci E, Arcangeli C, Prisco D, Abbate R, et al. Incidence of recurrent venous thromboembolism and of chronic thromboembolic pulmonary hypertension in patients after a first episode of pulmonary embolism. J Thromb Thrombolysis. 2010;30(3):294-9.

[27] Ashrani AA, Heit JA. Incidence and cost burden of post-thrombotic syndrome. J Thromb Thrombolysis. 2009;28(4):465-76.

[28] Kachroo S, Boyd D, Bookhart BK, LaMori J, Schein JR, Rosenberg DJ, et al. Quality of life and economic costs associated with postthrombotic syndrome. Am J Health Syst Pharm. 2012;69(7):567-72.

[29] Oudega R, Moons KG, Hoes AW. Limited value of patient history and physical examination in diagnosing deep vein thrombosis in primary care. Fam Pract. 2005;22(1):86-91.

[30] Di Nisio M, Wichers IM, Middeldorp S. Treatment for superficial thrombophlebitis of the leg. Cochrane Database Syst Rev [Internet] 2013 [Cited 2014 Sep 10]. Available from: http://www.ncbi.nlm.nih.gov/pubmed/23633322

[31] Bauersachs RM. Clinical presentation of deep vein thrombosis and pulmonary embolism. Best Pract Res Clin Haematol. 2012;25(3):243-51.

[32] Ramzi DW, Leeper KV. DVT and pulmonary embolism: Part I. Diagnosis. Am Fam Physician. 2004;69(12):2829-36.

[33] Homans J. Diseases of the veins. N Engl J Med. 1946;235(5):163-7.

[34] Cranley JJ, Canos AJ, Sull WJ. The diagnosis of deep venous thrombosis. Fallibility of clinical symptoms and signs. Arch Surg. 1976;111(1):34-6.

[35] Wells PS, Anderson DR, Bormanis J, Guy F, Mitchell M, Gray L, et al. Value of assessment of pretest probability of deep-vein thrombosis in clinical management. Lancet. 1997;350(9094):1795-8.

[36] Wells PS, Anderson DR, Rodger M, Forgie M, Kearon C, Dreyer J, et al. Evaluation of D-dimer in the diagnosis of suspected deep-vein thrombosis. N Engl J Med. 2003;349(13):1227-35.

[37] Torres-Macho J, Mancebo-Plaza AB, Crespo-Gimenez A, Sanz de Barros MR, Bibiano-Guillen C, Fallos-Marti R, et al. Clinical features of patients inappropriately undiagnosed of pulmonary embolism. Am J Emerg Med. 2013;31(12):1646-50.

[38] Grifoni S, Olivotto I, Cecchini P, Pieralli F, Camaiti A, Santoro G, et al. Short-term clinical outcome of patients with acute pulmonary embolism, normal blood pressure, and echocardiographic right ventricular dysfunction. Circulation. 2000;101(24):2817-22.

[39] Lucassen W, Geersing GJ, Erkens PM, Reitsma JB, Moons KG, Buller H, et al. Clinical decision rules for excluding pulmonary embolism: a meta-analysis. Ann Intern Med. 2011;155(7):448-60.

[40] Ullman E, Brady WJ, Perron AD, Chan T, Mattu A. Electrocardiographic manifestations of pulmonary embolism. Am J Emerg Med. 2001;19(6):514-9.

[41] Douma RA, Tan M, Schutgens RE, Bates SM, Perrier A, Legnani C, et al. Using an age-dependent D-dimer cut-off value increases the number of older patients in whom deep vein thrombosis can be safely excluded. Haematologica. 2012;97(10):1507-13.

[42] Schouten HJ, Geersing GJ, Koek HL, Zuithoff NP, Janssen KJ, Douma RA, et al. Diagnostic accuracy of conventional or age adjusted D-dimer cut-off values in older patients with suspected venous thromboembolism: systematic review and meta-analysis. BMJ. 2013;346:f2492.

[43] Kline JA, Mitchell AM, Kabrhel C, Richman PB, Courtney DM. Clinical criteria to prevent unnecessary diagnostic testing in emergency department patients with suspected pulmonary embolism. J Thromb Haemost. 2004;2(8):1247-55.

[44] Singh B, Mommer SK, Erwin PJ, Mascarenhas SS, Parsaik AK. Pulmonary embolism rule-out criteria (PERC) in pulmonary embolism–revisited: a systematic review and meta-analysis. Emerg Med J. 2013;30(9):701-6.

[45] den Exter PL, Klok FA, Huisman MV. Diagnosis of pulmonary embolism: Advances and pitfalls. Best Pract Res Clin Haematol. 2012;25(3):295-302.

[46] Stein PD, Fowler SE, Goodman LR, Gottschalk A, Hales CA, Hull RD, et al. Multidetector computed tomography for acute pulmonary embolism. N Engl J Med. 2006;354(22):2317-27.

[47] Mitchell AM, Jones AE, Tumlin JA, Kline JA. Prospective study of the incidence of contrast-induced nephropathy among patients evaluated for pulmonary embolism by contrast-enhanced computed tomography. Acad Emerg Med. 2012;19(6):618-25.

[48] Huisman MV, Klok FA. How I diagnose acute pulmonary embolism. Blood. 2013;121(22):4443-8.

[49] Anderson DR, Kahn SR, Rodger MA, Kovacs MJ, Morris T, Hirsch A, et al. Computed tomographic pulmonary angiography vs ventilation-perfusion lung scanning in patients with suspected pulmonary embolism: a randomized controlled trial. JAMA. 2007;298(23):2743-53.

[50] Takach Lapner S, Kearon C. Diagnosis and management of pulmonary embolism. BMJ. 2013;346:f757.

[51] Agnelli G, Becattini C. Acute pulmonary embolism. N Engl J Med. 2010;363(3):266-74.

[52] Konstantinides S. Clinical practice. Acute pulmonary embolism. N Engl J Med. 2008;359(26):2804-13.

[53] Duran-Mendicuti A, Sodickson A. Imaging evaluation of the pregnant patient with suspected pulmonary embolism. Int J Obstet Anesth. 2011;20(1):51-9.

[54] Arya R. How I manage venous thromboembolism in pregnancy. Br J Haematol. 2011;153(6):698-708.

[55] National Health and Medical Research Council. Prevention of Venous Thromboembolism in Patients Admitted to Australian Hospitals: Guideline Summary. Melbourne: National Health and Medical Research Council; 2009. 2 p.

[56] Australian Orthopaedic Association. Guidelines for VTE Prophylaxis for Hip and Knee Arthroplasty. Sydney: Australian Orthopaedic Association; 2010. 8 p.

[57] The Australia & New Zealand Working Party on the Management and Prevention of Venous Thromboembolism. Prevention of Venous Thromboembolism: Best practice guidelines for Australia & New Zealand, 4th edition. South Australia: Health Education and Management Innovations; 2007. 11 p.

[58] Geerts WH, Bergqvist D, Pineo GF, Heit JA, Samama CM, Lassen MR, et al. Prevention of venous thromboembolism: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines (8th Edition). Chest. 2008;133(6 Suppl):381-453.

[59] Eriksson BI, Borris LC, Friedman RJ, Haas S, Huisman MV, Kakkar AK, et al. Rivaroxaban versus enoxaparin for thromboprophylaxis after hip arthroplasty. N Engl J Med. 2008;358(26):2765-75.

[60] Lassen MR, Ageno W, Borris LC, Lieberman JR, Rosencher N, Bandel TJ, et al. Rivaroxaban versus enoxaparin for thromboprophylaxis after total knee arthroplasty. N Engl J Med. 2008;358(26):2776-86.

[61] Eriksson BI, Dahl OE, Rosencher N, Kurth AA, van Dijk CN, Frostick SP, et al. Dabigatran etexilate versus enoxaparin for prevention of venous thromboembolism after total hip replacement: a randomised, double-blind, non-inferiority trial. Lancet. 2007;370(9591):949-56.

[62] Falck-Ytter Y, Francis CW, Johanson NA, Curley C, Dahl OE, Schulman S, et al. Prevention of VTE in orthopedic surgery patients: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest. 2012;141(2 Suppl):278-325.

[63] Wan S, Quinlan DJ, Agnelli G, Eikelboom JW. Thrombolysis compared with heparin for the initial treatment of pulmonary embolism: a meta-analysis of the randomized controlled trials. Circulation. 2004;110(6):744-9.

[64] Jaff MR, McMurtry MS, Archer SL, Cushman M, Goldenberg N, Goldhaber SZ, et al. Management of massive and submassive pulmonary embolism, iliofemoral deep vein thrombosis, and chronic thromboembolic pulmonary hypertension: a scientific statement from the American Heart Association. Circulation. 2011;123(16):1788-830.

[65] Meyer G, Vicaut E, Danays T, Agnelli G, Becattini C, Beyer-Westendorf J, et al. Fibrinolysis for patients with intermediate-risk pulmonary embolism. N Engl J Med. 2014;370(15):1402-11.

[66] Mavrakanas T, Bounameaux H. The potential role of new oral anticoagulants in the prevention and treatment of thromboembolism. Pharmacol Ther. 2011;130(1):46-58.

[67] Bounameaux H, de Moerloose P. Is laboratory monitoring of low-molecular-weight heparin therapy necessary? No. J Thromb Haemost. 2004;2(4):551-4.

[68] Goldhaber SZ, Bounameaux H. Pulmonary embolism and deep vein thrombosis. Lancet. 2012;379(9828):1835-46.

[69] Choueiri T, Deitcher SR. Why shouldn’t we use warfarin alone to treat acute venous thrombosis? Cleve Clin J Med. 2002;69(7):546-8.

[70] Buller HR, Prins MH, Lensing AW, Decousus H, Jacobson BF, Minar E, et al. Oral rivaroxaban for the treatment of symptomatic pulmonary embolism. N Engl J Med. 2012;366(14):1287-97.

[71] Landman GW, Gans RO. Oral rivaroxaban for symptomatic venous thromboembolism. N Engl J Med. 2011;364(12):1178.

[72] Schulman S, Kearon C, Kakkar AK, Mismetti P, Schellong S, Eriksson H, et al. Dabigatran versus warfarin in the treatment of acute venous thromboembolism. N Engl J Med. 2009;361(24):2342-52.

[73] Schmitz EM, Boonen K, van den Heuvel DJ, van Dongen JL, Schellings MW, Emmen JM, et al. Determination of dabigatran, rivaroxaban and apixaban by UPLC-MS/MS and coagulation assays for therapy monitoring of novel direct oral anticoagulants. J Thromb Haemost. Forthcoming 2014 Aug 20.

[74] Lippi G, Ardissino D, Quintavalla R, Cervellin G. Urgent monitoring of direct oral anticoagulants in patients with atrial fibrillation: a tentative approach based on routine laboratory tests. J Thromb Thrombolysis. 2014;38(2):269-74.

[75] Samama MM, Contant G, Spiro TE, Perzborn E, Le Flem L, Guinet C, et al. Laboratory assessment of rivaroxaban: a review. Thromb J. 2013;11(1):11.

[76] Baglin T, Keeling D, Kitchen S. Effects on routine coagulation screens and assessment of anticoagulant intensity in patients taking oral dabigatran or rivaroxaban: guidance from the British Committee for Standards in Haematology. Br J Haematol. 2012;159(4):427-9.

[77] Jackson LR, 2nd, Becker RC. Novel oral anticoagulants: pharmacology, coagulation measures, and considerations for reversal. J Thromb Thrombolysis. 2014;37(3):380-91.

[78] Wood P. New oral anticoagulants: an emergency department overview. Emerg Med Australas. 2013;25(6):503-14.

[79] Rajasekhar A, Streiff MB. Vena cava filters for management of venous thromboembolism: a clinical review. Blood Rev. 2013;27(5):225-41.

[80] Schulman S, Kearon C, Kakkar AK, Schellong S, Eriksson H, Baanstra D, et al. Extended use of dabigatran, warfarin, or placebo in venous thromboembolism. N Engl J Med. 2013;368(8):709-18.

[81] Becattini C, Agnelli G, Schenone A, Eichinger S, Bucherini E, Silingardi M, et al. Aspirin for preventing the recurrence of venous thromboembolism. N Engl J Med. 2012;366(21):1959-67.

[82] Kearon C, Akl EA, Comerota AJ, Prandoni P, Bounameaux H, Goldhaber SZ, et al. Antithrombotic therapy for VTE disease: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest. 2012;141(2 Suppl):419s-94s.

It is with great pleasure that I welcome you to Volume 6, Issue 1 of the Australian Medical Student Journal (AMSJ), the national peer-reviewed journal for medical students. The AMSJ serves two purposes: firstly, to provide a stepping-stone for medical students wishing to advance their skills in academic writing and publication; and secondly, to inform Australian medical students of important news relating to medical education and changes in medical care. This issue of the AMSJ showcases an array of research, reviews, and opinions that address a wide range of contemporary subjects. In particular, there is a trend towards articles on translational research and national healthcare matters.
